“So why is iPad so phenomenally successful? Well it turns out that there’s a simple reason for this,” Apple CEO Tim Cook told an audience at the Apple event last week in San Jose. “People love their iPads.”

The response drew some awkward laughs, as it seemed almost like the punchline of a misfired joke. But it wasn’t a joke: Cook was absolutely serious.

On the surface, such an answer seems to lack the depth to provide insight into the tablet riddle. After all, there were many failed tablets before the iPad, and perhaps even more since. But it turns out that everyone may have been overthinking it. The iPad is successful because people love it. Said another way, the iPad is successful because Apple was able to create a brilliant product. It’s not about having certain specs or hitting a certain price. It’s not about checking off boxes. It’s about the product as a whole: the culmination of intangibles that only Apple seems able to nail time and time again. There is no question that the iPad has been a phenomenon.

“But we’re not taking our foot off the gas,” Cook continued.

The Fourth Generation iPad

Apple proceeded to show off two products. The first was the iPad we’re all well aware of by now: the 9.7-inch, 1.5-pound slab of glass and aluminum. Even though Apple had just revealed the third generation of this device (dubbed simply “the *new* iPad”) this past March, it really wasn’t taking its foot off the gas. It was time to show off the fourth generation.

Truth be told, the fourth-generation iPad isn’t all that different from the third-generation model. It’s more of an “iPad 3S,” if you will. I don’t mean that as an insult, or to downplay the update, but the changes are primarily under the hood. It’s all about taking a great product and making it better.

I’ve been playing with this latest version of the iPad for the past week. Yes, it’s faster. Apple claims twice the CPU and graphics performance thanks to the new A6X chip. That claim has been a little hard to test, since no apps are yet optimized to take advantage of the extra power (and mainly because the previous iPad was already so fast), but things do generally seem to launch and run a bit faster than they do on the third-generation iPad. I did get a chance to see a demo of a game optimized for the new chip (though it’s not out yet), and that’s clearly where this new iPad is going to shine.

For now, one of the main ways you’ll notice that this iPad is better than the last version is the front-facing camera. Previously, it was a VGA-quality camera (0.3 megapixels). Now it’s HD-quality (1.2 megapixels) and capable of capturing 720p video. This is key for FaceTime. Apple has slowly but surely been rolling out FaceTime HD video capabilities across its products, and now the iPad is on board as well.

The new iPad also switches to Apple’s new Lightning connector, matching the iPhone 5 and the new iPod touch and iPod nano. This makes the bottom of the device look a little cleaner, but connection performance is the same.

The real benefit of the Lightning connector may be that it allowed Apple to tweak the internals of the iPad, since the new connector takes up much less space. As a result, we get better performance while keeping the same excellent 10-plus hours of battery life. Perhaps more importantly, the new iPad doesn’t seem to run as hot as the last version did.

While “heat-gate” (“warm-gate”) was yet another overblown Apple controversy a few months back, the device’s temperature was at times noticeable (though far less so than any laptop’s, for example). Now it seems less so. Or maybe my hands have just grown calluses from all that handling without oven mitts. Hard to say.

If you were going to get an iPad before, you’ll obviously want to get this one now. In fact, you don’t even have a choice: Apple has discontinued the third-generation model. Prices remain the same across the board, as do all of the other features (WiFi/LTE, Retina display, etc.).

Yes, it is kind of lame for those of us who bought third-generation models that Apple updated the line so quickly, but, well, that’s Apple. To me, the jump to the fourth generation doesn’t seem nearly as big as the jump from the first to the second generation or from the second to the third, so perhaps take some solace in that.

It simply seems like Apple wanted to push out a new iPad before the holidays with the new connector, and gave it a spec boost as a bonus. Why not do more? Because it doesn’t need to right now. As Phil Schiller put it on stage, “We were already so far ahead of the competition, I just can’t… I can’t see them in the rearview mirror.” Maybe the Nexus 10 and the larger Kindle Fire HD change that. Or maybe not. It will be interesting to see what, if anything, Apple does in the spring, its typical time to push major iPad changes. My guess is that this boost is intended to tide the iPad over until a year from now.

The iPad mini

“It’s all about helping customers to learn about this great new technology and use it in ways they’ve never dreamed of. So what can we do to get people to come up with new uses for iPad?” Schiller asked on stage as the fourth-generation iPad rotated to reveal the main event: the iPad mini.

I’ve also had the chance to try out the iPad mini for the past week. My thoughts are much more straightforward here: Yes.

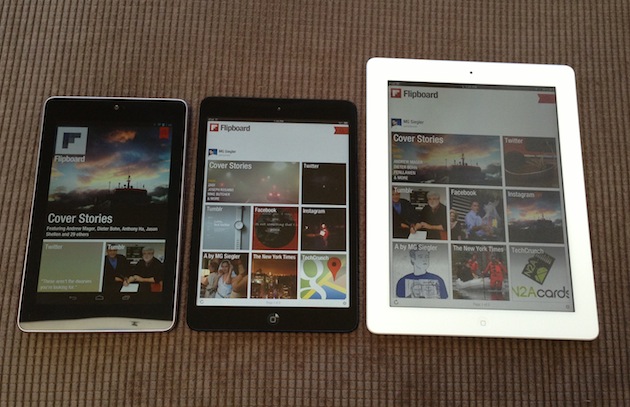

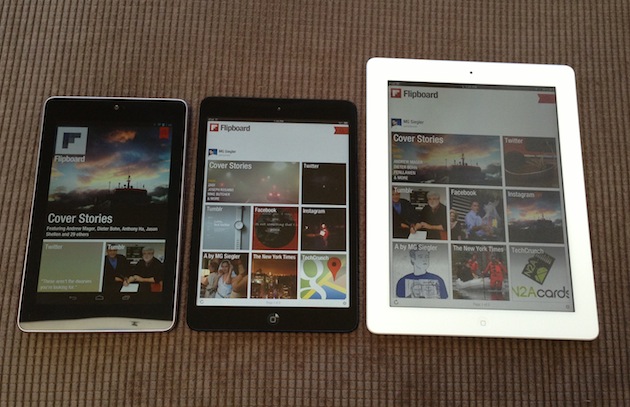

The iPad mini isn’t perfect (for one reason in particular, more on that below), but it’s damn close to my ideal device. In my review of the Nexus 7 (which I really liked, to the shock of many), I kept coming back to one thing: the form factor. Combine that with iOS, Apple’s app ecosystem, and the intangibles I spoke about earlier, and the iPad mini is an explosion of handheld joy.

The first thing you’ll notice is how light the iPad mini is. It’s similar to the effect of holding an iPhone 5 for the first time, though not quite as jarring. Still, the iPad mini is less than half the weight of a full-sized iPad (0.68 pounds vs. 1.5 pounds), so the difference is very noticeable. The iPad mini isn’t quite as light as a Kindle, though it’s not far off. And it’s lighter than a Nexus 7.

The next thing you’ll notice is how thin the iPad mini is. When you hold it, it almost feels like you’re just holding a sheet of glass. Amazingly, it’s thinner than the iPhone 5. Yes, you read that correctly.

By comparison, the regular iPad feels like you’re holding a full flat-panel monitor. And the Nexus 7 feels like you’re holding a piece of plastic with a screen bolted on. Apple has really nailed the feel of this device with its slightly rounded sides (much less pronounced than the larger iPad), chamfered edge (just like the iPhone 5), and flat aluminum back.

One of the smarter things Apple has done with the iPad mini is trimming down the side bezels on the front of the device. On other tablets (including the iPad), the bezels are extremely noticeable, especially compared to something like the iPhone, which has basically no side bezel. Thick bezels detract from the screen.

The reason for these large bezels is simple: you need somewhere to rest your hands when holding the device. But because you’re likely to hold the iPad mini in one hand, Apple decided to fix the bezel issue with software instead. In portrait orientation, iOS recognizes when your hand is resting on either side of the screen and ignores that contact as input. For people who have been using iOS for a while, this takes some getting used to: you expect that resting your palm on the screen will trigger something. But trust the software.

Apple likely had to come up with some solution here, because if it had kept thicker bezels, the iPad mini would have been awkwardly shaped. As it is, the mini is already quite a bit wider than the Nexus 7; it’s about as wide as it can comfortably be. Wider bezels would have pushed it over the edge, so to speak.

The reason for this is the screen. The screen is the single most important factor of any tablet, and even more so with the iPad mini. For the iPad mini to make sense, Apple felt it needed to keep the same screen resolution as the iPad 2: 1024-by-768 (more on this below). That meant it couldn’t use a widescreen ratio like the 16:9 or 16:10 screens rivals are using for their small tablets, and instead had to stick with the standard iPad ratio of 4:3.
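To put rough numbers on why the 4:3 screen makes the mini so much wider, here is a minimal Python sketch that derives a screen’s physical sides from its diagonal and aspect ratio with the Pythagorean theorem. It assumes the nominal diagonals (7.9 inches for the iPad mini, 7 inches for the Nexus 7) and the Nexus 7’s published 1280-by-800 (16:10) panel; these describe the visible screen only, not the outer dimensions of either device.

```python
import math

def screen_size(diagonal_in, ratio_long, ratio_short):
    """Return (long_side, short_side) in inches for a screen with the given
    diagonal and aspect ratio, scaling the ratio's own diagonal to match."""
    ratio_diagonal = math.hypot(ratio_long, ratio_short)
    return (diagonal_in * ratio_long / ratio_diagonal,
            diagonal_in * ratio_short / ratio_diagonal)

# Nominal figures (assumed): iPad mini is 7.9 inches at 4:3, Nexus 7 is 7 inches at 16:10.
mini_long, mini_short = screen_size(7.9, 4, 3)
nexus_long, nexus_short = screen_size(7.0, 16, 10)

# Held in portrait, the short side is the width you feel in your hand.
print(f"iPad mini: {mini_short:.2f} x {mini_long:.2f} in")
print(f"Nexus 7:   {nexus_short:.2f} x {nexus_long:.2f} in")
```

By this estimate the mini’s screen is about 4.74 inches wide in portrait versus about 3.71 for the Nexus 7, roughly an inch more before any bezel is added.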

While we’re on the subject of the screen, let’s not beat around the bush: if this device has a weakness, it’s the screen. But that statement comes with a very big asterisk. As someone who is used to a “retina” display on my phone, my tablet, and now even my computer, I find the downgrade to a non-retina display quite noticeable. This goes away over time as you use the iPad mini non-stop, but if you switch back and forth with a retina screen, it’s jarring.

That’s not to say the iPad mini screen is bad; it’s not, by any stretch of the imagination. It’s just not retina-level. At 163 pixels per inch, it’s actually quite a bit sharper than the screen of the iPad 2 (132 pixels per inch), the last non-retina iPad, but you really can’t compare it to a retina display.

It doesn’t even match the Nexus 7 display, which has 216 pixels per inch; text definitely renders sharper there than it does on the iPad mini. However, the overall display quality (brightness, contrast, color levels) of the iPad mini seems better than the Nexus 7’s.
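Pixel density is simply a screen’s diagonal length in pixels divided by its diagonal length in inches. The minimal Python sketch below reproduces the densities discussed above from resolution and nominal diagonal; the Nexus 7 (1280-by-800, 7-inch) and retina iPad (2048-by-1536, 9.7-inch) resolutions are published specs rather than figures from this review. Using the iPad mini’s nominal 7.9-inch diagonal gives about 162 ppi, a hair under Apple’s quoted 163, presumably because the marketed diagonal is rounded.

```python
import math

def ppi(width_px, height_px, diagonal_in):
    """Pixels per inch: diagonal length in pixels / diagonal length in inches."""
    return math.hypot(width_px, height_px) / diagonal_in

# (width, height, nominal diagonal in inches)
screens = {
    "iPad 2":      (1024, 768, 9.7),
    "iPad mini":   (1024, 768, 7.9),   # Apple quotes 163 ppi
    "Nexus 7":     (1280, 800, 7.0),
    "retina iPad": (2048, 1536, 9.7),
}

for name, (w, h, d) in screens.items():
    print(f"{name:12s} {ppi(w, h, d):6.1f} ppi")
```

The results land at roughly 132, 162, 216 and 264 ppi respectively, which matches the published figures closely.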

If you haven’t used a retina iPad before, the pixel density is unlikely to be an issue; after all, Apple introduced retina displays just a couple of years ago. And overall, I don’t expect it to be a major issue in the broad market. Remember that the iPad 2 continues to sell very well despite lacking a retina display. But again, it needs to be mentioned as one potential weakness of the device.

If you can get beyond that (and again, I can), it’s hard to find another fault with the iPad mini. When Apple claims that it has essentially taken an iPad 2 and put it in this new form factor, it’s not exaggerating. That’s perhaps the most remarkable thing about this device: it’s an iPad 2, still a brilliant device in its own right, at a fraction of the size.

In fact, it’s even better than an iPad 2 in a few respects. The cameras (both front and back) are much better. The WiFi technology found inside is better. There’s an option to get LTE connectivity (though the model I tested did not have this included). The Bluetooth is better. You can get more storage space (up to 64 GB).

I still can’t believe it has the same 10 hours of battery life, but it does. In fact, the battery life may even be a little better than the larger iPad’s. Insane.

The single biggest selling point of the iPad mini is likely to be the app ecosystem. Because the iPad mini runs the same version of iOS as all other iPads, it can run every application that any other iPad can run, plus iPhone/iPod touch apps. And because it shares the iPad 2’s 1024-by-768 resolution, it can run all of these apps completely unmodified.

I’m including this paragraph so you’ll take a moment to consider just how important that last sentence is.

The iPad mini can run over 275,000 iPad apps without any modification. And, just like the regular iPad, it can run the more than 700,000 iOS apps overall, with iPhone apps shown at their original size or scaled up 2x. Scaled up 2x, some iPhone apps don’t look great (the text in particular), but that was always an issue with the iPad as well. Luckily, most of your favorite apps have been tailored for the iPad by now.

Yes, touch targets are slightly smaller on the iPad mini than they are on the iPad, but I haven’t had an issue with this. If anything, I find it a little easier to type on the on-screen keyboard because the keys are closer together.
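Because the mini draws the same 1024-by-768 layout as the iPad 2 on a smaller panel, every on-screen element, touch targets included, shrinks by the ratio of the two pixel densities (132/163, or about 81 percent). A minimal Python sketch, assuming Apple’s published densities (132 ppi for the iPad 2, 163 ppi for the iPad mini) and the 44-point minimum tap target recommended in Apple’s iOS Human Interface Guidelines; on these non-retina screens one point is one pixel.

```python
MM_PER_INCH = 25.4

def physical_size_mm(pixels, ppi):
    """Physical length, in millimetres, of a run of pixels at a given density."""
    return pixels / ppi * MM_PER_INCH

# 44-point minimum tap target (Apple HIG); 1 point = 1 pixel on non-retina screens.
TAP_TARGET_PX = 44

ipad2_mm = physical_size_mm(TAP_TARGET_PX, 132)
mini_mm = physical_size_mm(TAP_TARGET_PX, 163)

print(f"iPad 2:    {ipad2_mm:.1f} mm")
print(f"iPad mini: {mini_mm:.1f} mm ({mini_mm / ipad2_mm:.0%} of the iPad 2 size)")
```

That works out to roughly 8.5 mm versus 6.9 mm per minimum-size target, a difference of under 2 mm.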

If Apple had made the iPad mini purely as a gaming device, I think it would be one of the best-selling gadgets of all time. Some iPad games play so beautifully on the iPad mini that you’d think they were custom-tailored for this form factor. Playing games on the regular iPad is great. Playing games on the iPad mini is fantastic, because the device is much easier to hold in landscape orientation for extended periods of time.

Gaming has already proven to be a massive opportunity for Apple with iOS, and the iPad mini is going to expand it. If I were Sony, Nintendo, or yes, Microsoft, I’d be very worried.

The iPad mini is also a great media player. You’ll be able to access all the typical iTunes fare, and it’s especially good for watching videos because, again, it’s so light to hold.

Books, magazines, and reading apps are likely to be another big use-case for the iPad mini. If you can get past the non-retina text resolution, this device is clearly more conducive to reading in bed than its larger counterpart.

So, all of this (beyond the screen caveat) sounds great. Home run, right? In my mind, yes. I can easily see the iPad mini becoming what the iPod mini was to the iPod: the version that takes a popular, iconic device and vastly expands its user base. Apple says it has sold 100 million iPads since the initial launch two and a half years ago. That leaves roughly 6.9 billion people on this planet without one. The iPad mini can help with that.

Perhaps the biggest question mark in my mind is the price. Leading up to the unveiling, rumored prices ranged from $249 to $299, and some even suggested Apple might lay the hammer down on rivals with a $199 price tag. The reality was much more Apple-like: prices starting at $329.

Apple has grown to be the most successful company in the world because it sells quality devices that people want at a healthy margin. As a result, the profits have rolled in. Last week, during its earnings call, Apple made a point of saying that the iPad mini will have lower margins than the rest of its products. Yes, even at $329. That’s not an excuse for the price; it’s the reality of the price.

But how will a $329 tablet fare in a world of $199 tablets? It’s hard to know for sure, but my guess would be somewhere in the range of “quite well” to “spectacular.” Apple has done a good job of making the case that the iPad mini is not just another 7-inch tablet. In fact, it’s not a 7-inch tablet at all. It’s a 7.9-inch tablet: a subtle but important difference. As a result, it can use every iOS app already in existence. It can access the entire iTunes ecosystem. And it will be sold in Apple Stores.

Apple isn’t looking at this as $329 versus $199. They’re looking at this as an impossibly small iPad 2 sold at the most affordable price for an iPad yet. In other words, they’re not looking at the tablet competition. This isn’t a tablet. It’s an iPad. People love these things.

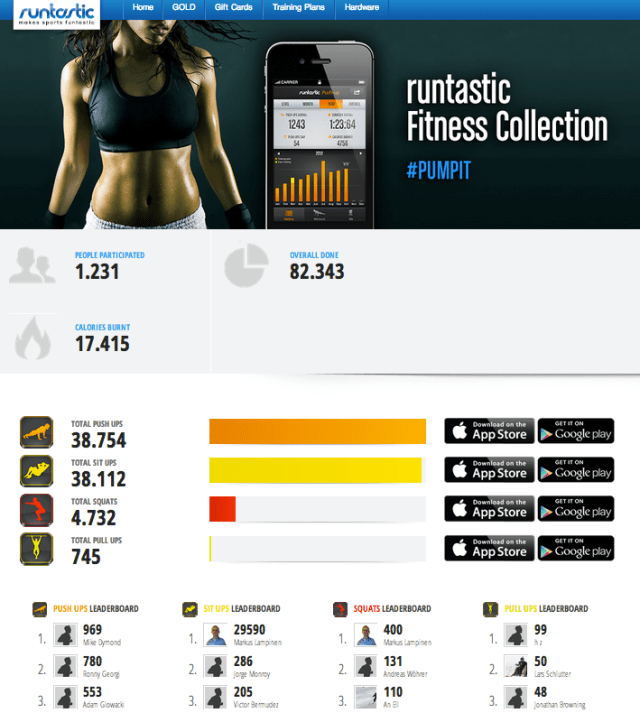

Thus far, the company has released nine free and paid apps (thirteen with its latest additions), with its most popular app being its namesake (Runtastic), which allows users, among other things, to connect to their social media accounts so they can compete with friends, share updates and post pictures. It also offers Nike+-style audio feedback (like words of encouragement during routines), along with a number of post-exercise features that enable users to comment on their exercise, leaving notes about the run, the weather, conditions of the trail, etc., with the ability to return to that stored info in the future. As for its new Fitness App Collection, each focuses on a specific indoor workout routine, offering training plans developed by fitness experts to help users improve strength and stamina by working toward a set number of repetitions, be that 20 push-ups or 50 pull-ups. Using your phone’s proximity sensor and accelerometer, the apps count the number of repetitions you complete, offering voice coaching and an automatic timer to create the sensation that you’re working out with your own (virtual) personal trainer. Users can buy the training plans in-app or use the app without the plans for free.

For those Android users still rolling their eyes, it’s also worth checking out the new PRO version of Runtastic the company launched earlier this month, which, thanks to Google Earth integration, lets users watch their runs or bike rides after they happen, in 3D video. Users can see bird’s-eye views of the course they followed, reliving each exciting moment as recreated by Google from GPS data, with pace, time and elevation shown on-screen in 3D.
